Sentiment Analysis for content writers (AI tool)

Company

Kentico software, s.r.o.

Segment

B2B · SaaS · Content Management System (CMS) · DXP (digital experience platform)

Timestamp

2021

My role

problem definition challenger, interaction designer, visual designer

Achievements

A big help for the business: thanks to this implementation, our product will likely be shortlisted by customers.

Challenges

A feature-focused mindset: building the product without addressing user needs.

Content writers are not the only ones who increasingly rely on everyday AI help, such as checking the grammar or tone of a text.

While there are certainly many ways to integrate existing tools into the software of choice, having a built-in tool makes the product more independent and truly all-in-one, mainly in the eyes of big analyst companies that might get you some great deals in the long term.

Voicing potential problems

Can this really solve any problem?

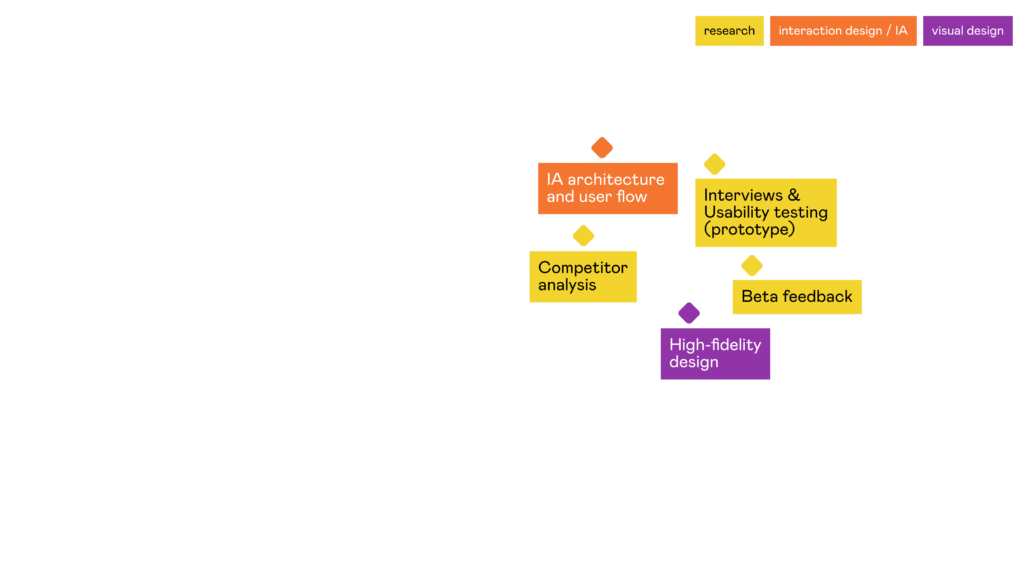

Because the PM had not done user research before the decision was made, and time was short, I ran a quick survey about using such a tool among internal Kentico users and other people who fit the target persona for this feature (content writers).

The conversations were mainly in Czech; the parts highlighted in red mark where respondents said they wouldn’t trust the tool we proposed.

It was decided that we should proceed with the idea because the business would benefit from the feature in the long term (as a “checklist feature”) and the technical solution would take weeks, not months.

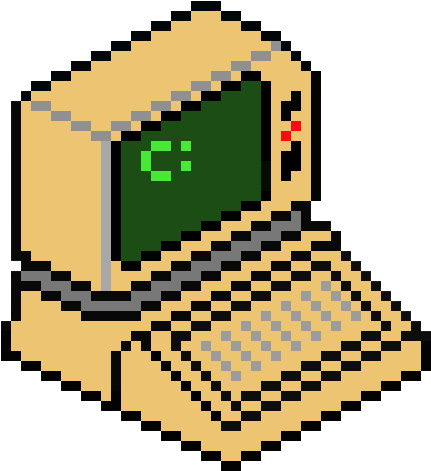

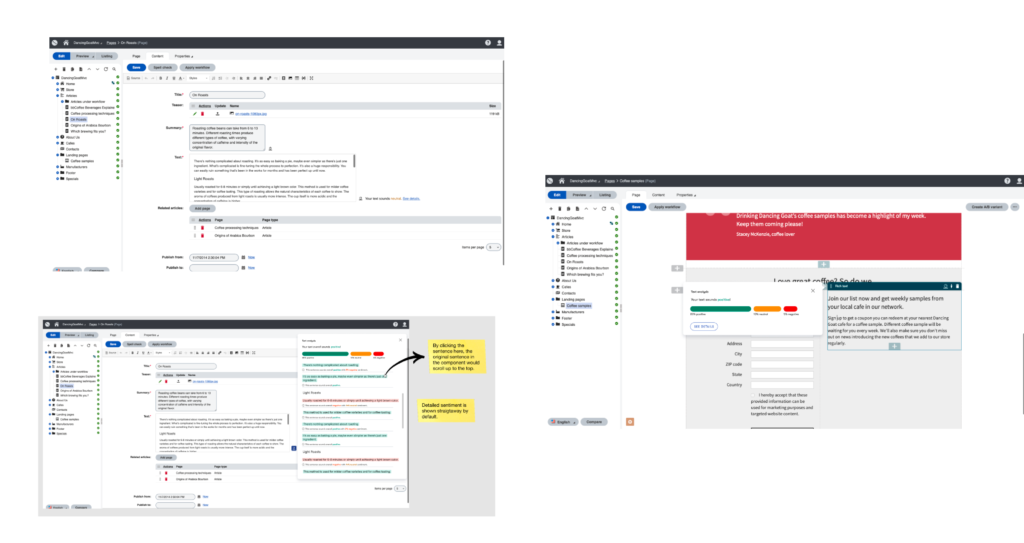

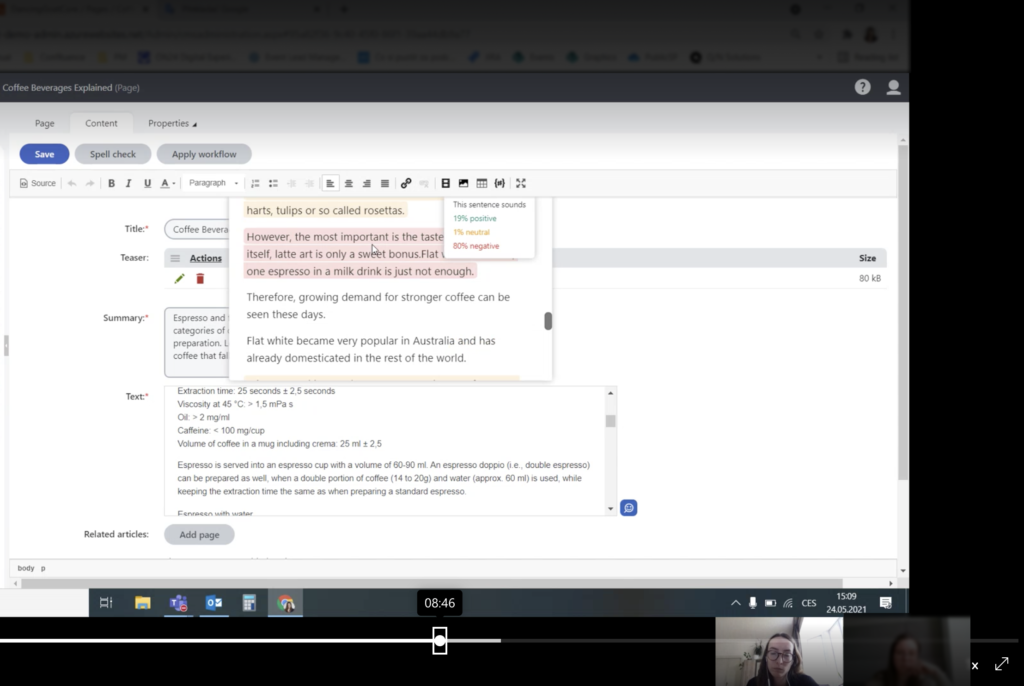

Design iterations

I tried different approaches and refined the results with the PM and the team to deliver the most suitable experience within the short time frame.

Designing the flows

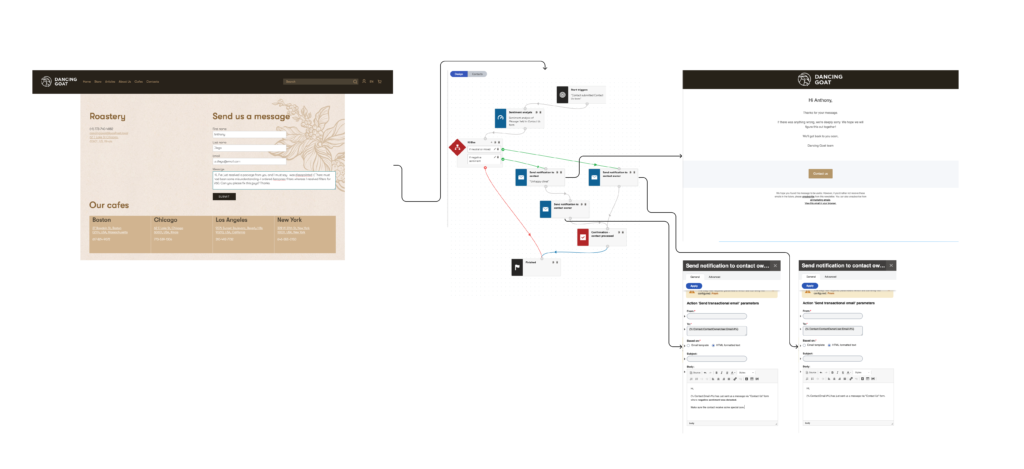

Users might use the tool not only to check the sentiment of their own text but also to react quickly when a customer sends a disappointed message or comment.

When a contact sends a negative message, the AI detects its sentiment, the marketing automation process is triggered, and that contact automatically receives a “Sorry” message.

What do users say?

Usability testing didn’t reveal major usability issues, but it did reveal a lack of usefulness (for example, participants mistrusted the results).

The product brief included a problem definition and a description of user needs, but these were not based on data (the technical POC was tested, but not whether it is useful to users).

What went well

Thanks to the survey at the beginning of the process and to asking more questions during usability testing, I filled in the gaps and found out about serious problems before it was too late.

Lessons learned

Building things that might help the business is OK. But if we’re not building a feature to solve user problems, we need to define other ways to measure success, e.g. Sales or Marketing metrics.

Be persistent. Great design often requires a great deal of effort put into communication, especially when the feature doesn’t take users into account!

Challenge decisions from the beginning. When the PM brought the brief to the table without data to back it up and without metrics to measure after implementation, it was necessary to challenge it and offer help where possible.

Ownership is important. Agree on who owns the work and who the decision-maker is.
